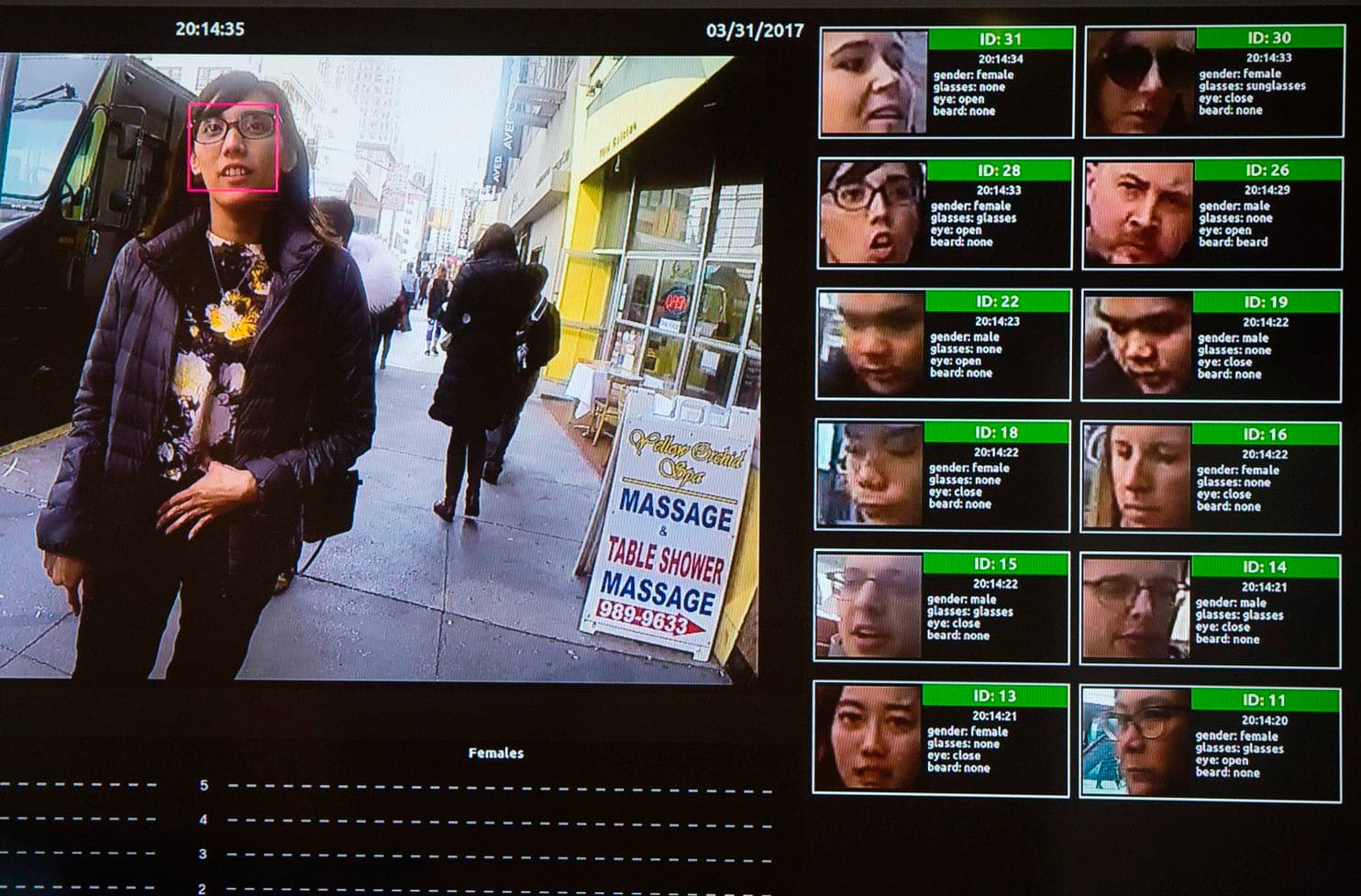

With presidential shenanigans, Brexit, and a multitude of other issues keeping people shocked or entertained, the quiet plan to deploy facial recognition systems at twenty top U.S. airports over the next several years has caught the public off guard.

BuzzFeed journalist Davey Alba recently produced a highly credible and in-depth report on the use and deployment of “biometric verification of identities” at U.S. airports. The facial recognition systems are being touted as a user-friendly and convenient way to travel. Yet, if you scratch the surface, this insidious and legally gray encroachment of technology on daily life will undoubtedly test societal boundaries. It also raises questions about how easily the data, once captured, could be put to nefarious use.

A rightful chorus of concern about privacy has erupted. However, what concerns me most is not only the computer code at the core of these systems, but also technology companies’ lack of understanding when designing and deploying systems that will inherently change culture and society.

Enculturation of Technology

Technology has long been perceived as non-cultural. Its base binary code is essentially on or off. As such, it seems non-subjective, scientific, and neutral. But this couldn’t be further from the truth. We have already seen Facebook used to alter democracy and artificial intelligence (AI) trained to be a serial killer.

Behind all contemporary technology are armies of computer programmers from all walks of life, each carrying with them a personalized world view which they subconsciously code into the neutral network we call technology. In anthropological terms, this is enculturation.

To develop facial recognition systems that can scrape, combine, and return a result, programmers need source material so that algorithms can learn how to recognize human faces. Recently, in a misguided effort to address inherent enculturation, IT company IBM decided to build and teach an AI facial recognition system by scraping a million facial images from Flickr.

Not only was this material used beyond its intended scope, but the IBM programmers also tested images against 200 values encompassing everything from skin tone, assumed gender, and estimated age to a myriad of other interpreted facial “qualities.” These libraries of pre-ordained values, produced primarily by computer programmers with no training in the humanities, are the source of the enculturation issue.

I also question the use of random data sets pulled from the “wild.” The intent behind each image, and the image itself, are products of their own encultured process and, as such, were never meant to serve as training sets for facial recognition software.

Artificial Intelligence and Bias

Joy Buolamwini, an esteemed Rhodes Scholar and founder of the Algorithmic Justice League, gets to the heart of the problem of algorithmic bias, which she calls the “coded gaze.” In her recent research, 1,270 images were selected from three African and three European countries and grouped by gender and skin type. Facial analysis systems from IBM, Microsoft, and Face++ were then compared on how accurately they handled this broader range of facial types and variations.

Overall, the three companies’ systems were roughly 88 to 94 percent accurate. However, recognition of lighter-skinned faces was much more accurate than recognition of darker-skinned faces. Further, darker-skinned females faced error rates of up to almost 35 percent. Even worse, the errors were heavily lopsided: in at least one of the systems, almost 96 percent of the faces assigned the wrong gender were those of women.

Lastly, there is no accurate statistic on the total number of facial deformities in the world. The variance and degrees of facial types due to birth, accident, or environmental exposure are staggering. Combat facial injuries among veterans pose an interesting dilemma for this race to deploy facial recognition systems as a means of accurately identifying people. What about identical twins, or doppelgängers? As yet, there is little research on how to respectfully encode these variables within facial recognition systems.

Tip of the Iceberg

Facial accuracy is only the tip of the iceberg. China has deployed facial recognition systems in Orwellian fashion at airports, shopping centers, train stations, and other public spaces, under the guise of “traveler convenience” but for the sole purpose of enforcing a regime-like society. If that isn’t enough to convince any Western government of the immense danger this poses to its citizens, I worry that the genie is already out of the bottle and we will have lost all rights to our identities.

Wow! China Airport face recognition systems to help you check your flight status and find the way to your gate. Note I did not input anything, it accurately identified my full flight information from my face! pic.twitter.com/5ASdrwA7wj

— Matthew Brennan (@mbrennanchina) March 24, 2019

Our face is our unique map etched by personal experiences, the environments we have encountered, and the journeys we make. In many ways, it is like our fingerprints, unique and indelible. However, our face is being measured against a myriad of variables programmed by armies of people who bring their own biases to the table.

Once captured and stored, that map is backed up, copied, and distributed to governmental and commercial partners alike. The immense value of that data outweighs any commitment to preserving public privacy, which will result in a fairly quick loss of your identity, your most valuable asset. In our mad rush to embrace the fallacy of a world made better through technology, we will lose what is unique about the human condition: our humanity.

Disclaimer: The views and opinions expressed here are those of the author and do not necessarily reflect the editorial position of The Globe Post.